Configuring a Hadoop Development Environment in IDEA and an HDFS Operations Demo


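For the basic Hadoop environment setup (non-high-availability), see the previous post.

IDEA Maven Configuration

1. Download Maven

Download address

Download the archive from the official site and extract it to any folder.

Maven requires JDK 1.7 or later.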

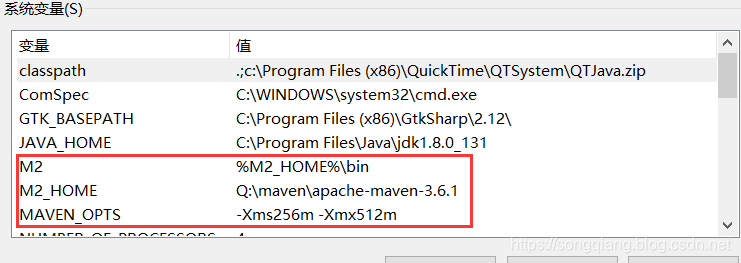

2. Set environment variables

Change M2_HOME to your actual installation path; the other values can be copied as-is.

M2_HOME=D:\maven\apache-maven-3.6.3-bin\apache-maven-3.6.3

M2=%M2_HOME%\bin

MAVEN_OPTS=-Xms256m -Xmx512m

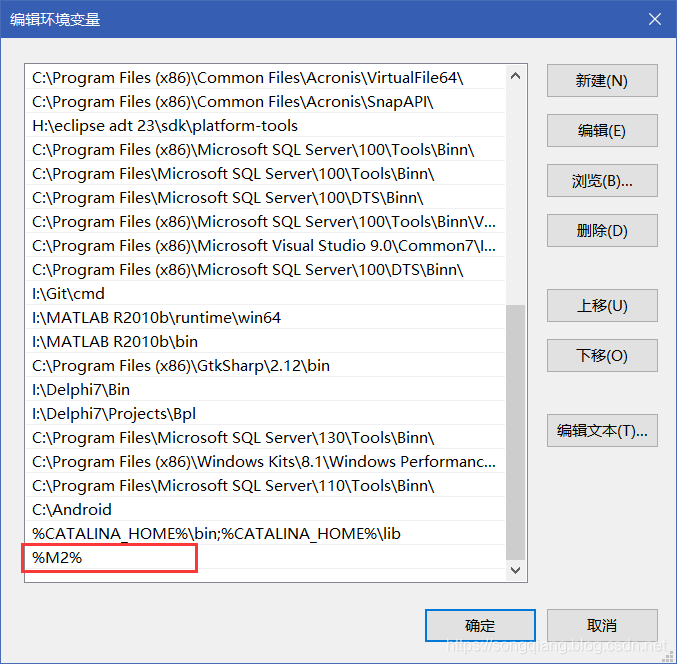

Add the following entry to the Path variable:

%M2%
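The variables above can also be sanity-checked from Java. The following is a minimal sketch, not part of the original setup: the class and method names are hypothetical, and it assumes a Maven 3.3+ layout in which the Windows launcher is bin\mvn.cmd.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: checks that M2_HOME points at a Maven installation.
class MavenEnvCheck {

    // Path of the Windows launcher under a Maven home (assumes Maven 3.3+).
    static Path launcher(Path mavenHome) {
        return mavenHome.resolve("bin").resolve("mvn.cmd");
    }

    // True if the launcher file exists under the given directory.
    static boolean looksLikeMavenHome(Path mavenHome) {
        return Files.isRegularFile(launcher(mavenHome));
    }

    public static void main(String[] args) {
        String home = System.getenv("M2_HOME");
        if (home == null || home.isEmpty()) {
            System.out.println("M2_HOME is not set");
            return;
        }
        Path mavenHome = Paths.get(home);
        System.out.println("M2_HOME = " + mavenHome);
        System.out.println("launcher found: " + looksLikeMavenHome(mavenHome));
    }
}
```

If the launcher is reported as missing, M2_HOME most likely points one directory level too high or too low.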

Open cmd and run mvn --version. Output similar to the following means the configuration succeeded:

Apache Maven 3.6.1 (d66c9c0b3152b2e69ee9bac180bb8fcc8e6af555; 2019-04-05T03:00:29+08:00)

Maven home: Q:\maven\apache-maven-3.6.1\bin\..

Java version: 1.8.0_131, vendor: Oracle Corporation, runtime: C:\Program Files\Java\jdk1.8.0_131\jre

Default locale: zh_CN, platform encoding: GBK

OS name: "windows 10", version: "10.0", arch: "amd64", family: "windows"

3. Configure mirrors and the jar download directory

Open conf/settings.xml under the Maven directory.

Find the mirrors tag and paste the following code inside it:

<mirror>
    <id>alimaven</id>
    <name>aliyun maven</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
    <mirrorOf>central</mirrorOf>
</mirror>
<mirror>
    <id>repo2</id>
    <mirrorOf>central</mirrorOf>
    <name>Human Readable Name for this Mirror.</name>
    <url>http://repo2.maven.org/maven2/</url>
</mirror>
<mirror>
    <id>ui</id>
    <mirrorOf>central</mirrorOf>
    <name>Human Readable Name for this Mirror.</name>
    <url>http://uk.maven.org/maven2/</url>

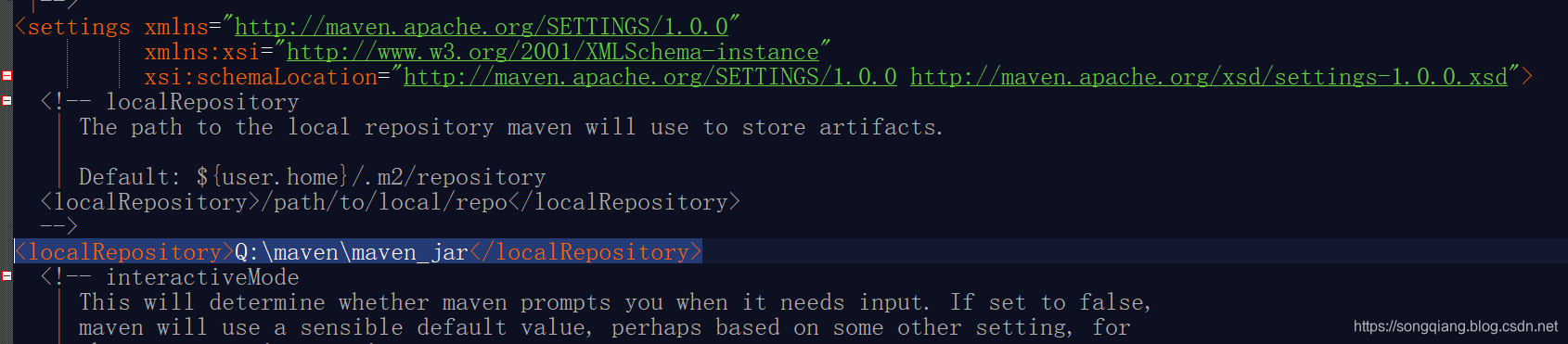

</mirror>找到localRepository标签,在里面填写jar包下载的目录,不写的话默认就会下载到c盘

<localRepository>Q:\maven\maven_jar</localRepository>

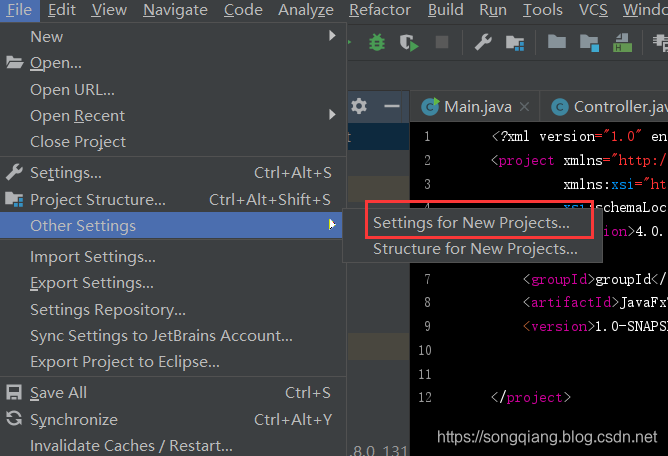

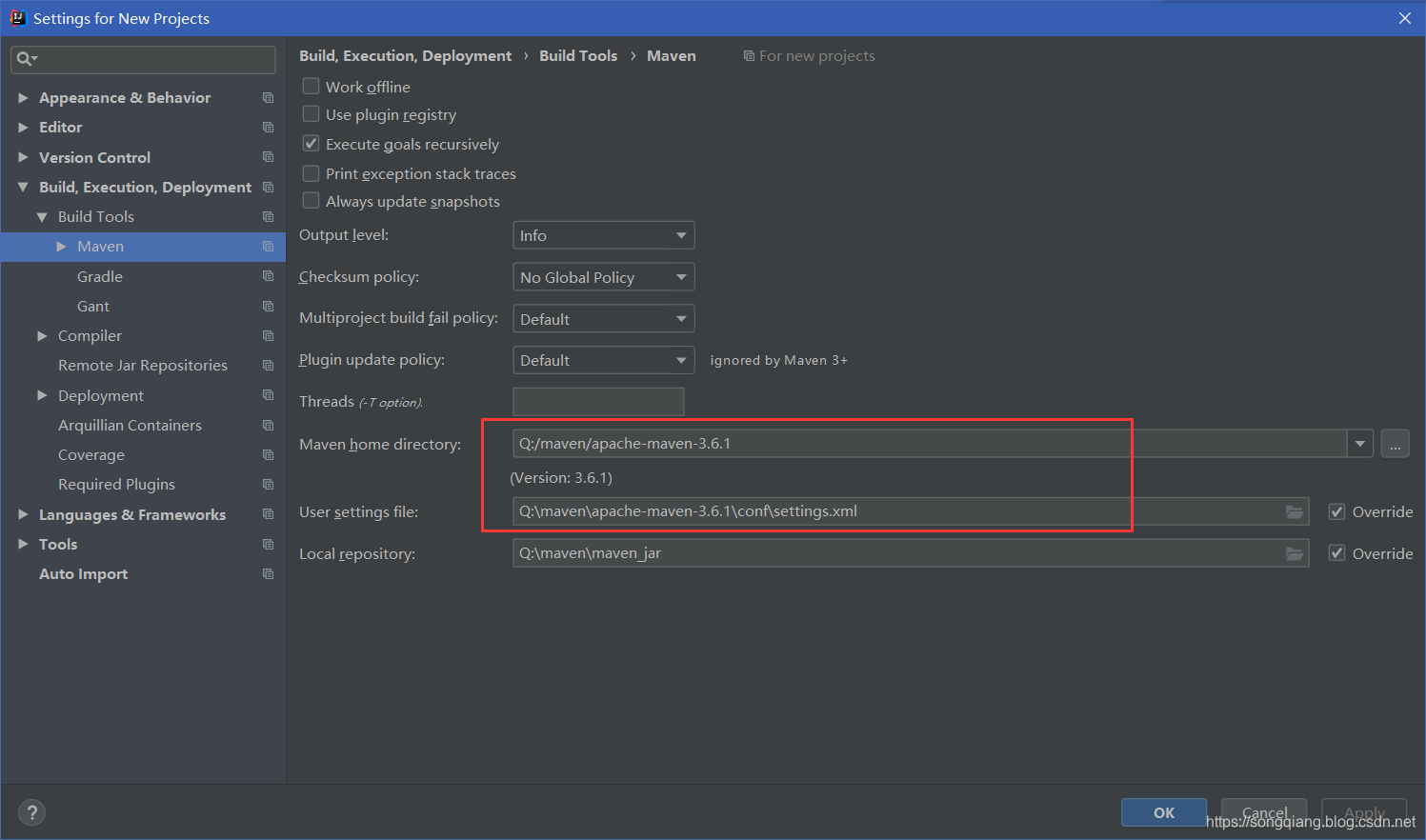
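To see where jars would go without the localRepository setting, the default location can be computed from the user.home system property. This is only an illustrative sketch; the class and method names are hypothetical.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: prints Maven's fallback local repository location.
class LocalRepoDefault {

    // Maven falls back to ${user.home}/.m2/repository when
    // <localRepository> is absent from settings.xml.
    static Path defaultLocalRepository(String userHome) {
        return Paths.get(userHome, ".m2", "repository");
    }

    public static void main(String[] args) {
        System.out.println(defaultLocalRepository(System.getProperty("user.home")));
    }
}
```

Running it on the machine in question shows which drive would fill up if the setting were omitted.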

4. Configure Maven in IDEA

Changing the default settings only affects newly created projects.

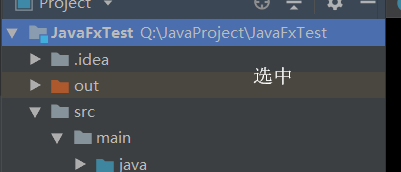

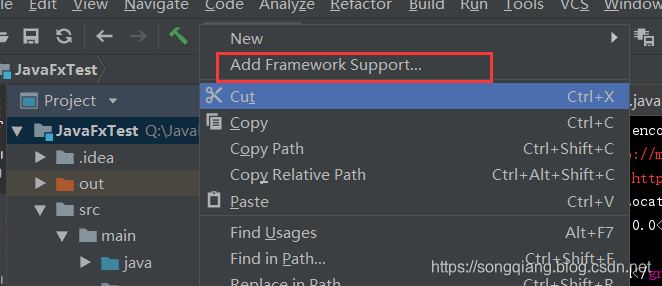

Add Maven to the current project

In pom.xml, add the Hadoop dependencies hadoop-common, hadoop-client, and hadoop-hdfs:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.bigdata.master</groupId>
    <artifactId>hadoop</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.7.3</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.7.3</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.7.3</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/junit/junit -->
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
        </dependency>
    </dependencies>

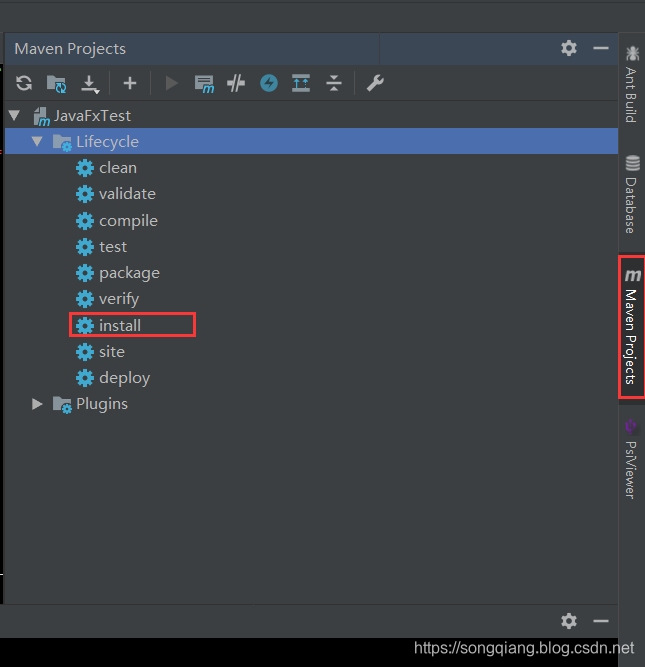

</project>在出现的选项中选择maven,选择右边的maven选项,点击install,idea就会自动下载一些jar
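Once the download finishes, one quick way to confirm that the Hadoop jars actually reached the project classpath is to look up a few well-known classes by name. This is a hedged sketch with a hypothetical class name; the class-to-artifact mapping in the comments assumes Hadoop 2.7.3 as declared in the pom.

```java
// Hypothetical helper: reports whether selected Hadoop classes can be loaded.
class HadoopClasspathCheck {

    // Returns true if the named class can be located on the classpath.
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: locate the class without running static initializers
            Class.forName(className, false, HadoopClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "org.apache.hadoop.conf.Configuration",         // from hadoop-common
            "org.apache.hadoop.fs.FileSystem",              // from hadoop-common
            "org.apache.hadoop.hdfs.DistributedFileSystem"  // from hadoop-hdfs (2.7.x)
        };
        for (String name : names) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```

A MISSING entry usually means the Maven import did not complete or the dependency version could not be resolved from the configured mirror.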

5. Test

Code:

package hdfs.demo;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class main {

    public static void main(String[] args) throws IOException {
        // Print a file
        catFile();
        // Create a directory
        // mkdir();
    }

    public static void catFile() throws IOException {
        // Create the configuration object
        Configuration conf = new Configuration();
        // Point the client at the NameNode
        conf.set("fs.defaultFS", "hdfs://master:9000");
        // Get the file system and open the file; try-with-resources closes both
        try (FileSystem fs = FileSystem.get(conf);
             FSDataInputStream fis = fs.open(new Path("/tmp/hello"))) {
            // Copy the file contents to the console; false leaves System.out open
            IOUtils.copyBytes(fis, System.out, conf, false);
        }
    }

    public static void mkdir() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://master:9000");
        try (FileSystem fs = FileSystem.get(conf)) {
            Path outputDir = new Path("/tmp/first");
            if (!fs.exists(outputDir)) { // create the directory only if it does not exist
                fs.mkdirs(outputDir);
                System.out.println("Directory created successfully!");
            } else {
                System.out.println("Directory already exists!");
            }
        }
    }
}
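The last argument of IOUtils.copyBytes decides whether both streams are closed after the copy, which matters when the destination is System.out. The sketch below is a rough plain-Java stand-in for that behaviour, not Hadoop's implementation; the class name, method name, and return value are assumptions made for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in that mimics a buffered copy with an optional close.
class CopyBytesSketch {

    // Copies all bytes from in to out and returns the number copied.
    // Both streams are closed only when close is true.
    static long copy(InputStream in, OutputStream out, int bufferSize, boolean close)
            throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
            out.flush();
        } finally {
            if (close) {
                in.close();
                out.close();
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        InputStream in = new ByteArrayInputStream("hello hdfs\n".getBytes(StandardCharsets.UTF_8));
        // close=false keeps System.out usable for the next println
        copy(in, System.out, 4096, false);
        System.out.println("System.out is still open");
    }
}
```

Passing true for the flag would close System.out as well, so any later console output from the same program would be silently lost.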

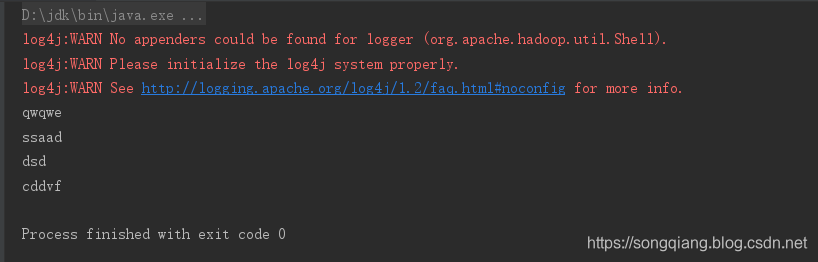

Result:
