Scala runtime exception: Exception in thread "main" java.lang.NoSuchMethodError: scala.Predef$


Using IntelliJ IDEA + Scala + Spark, I ran the following program:

```scala
package big.data.analyse.mllib

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.mllib.fpm.FPGrowth

object FP {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local").setAppName("FP") // set the master and application name
    val sc = new SparkContext(conf) // create the SparkContext

    val data_path = "D:\\下载\\2.sample.txt"
    val data = sc.textFile(data_path)
    // each line is one transaction whose items are separated by spaces
    val examples = data.map(_.split(" "))

    val minSupport = 0.2
    val model = new FPGrowth().setMinSupport(minSupport).run(examples)

    // print the number of frequent itemsets
    println(s"Number of frequent itemsets: ${model.freqItemsets.count()}")

    // print the association rules that meet the minimum confidence, with their confidence
    model.generateAssociationRules(0.8).collect().foreach { rule =>
      println("[" + rule.antecedent.mkString(",") + "=>" + rule.consequent.mkString(",") + "]," + rule.confidence)
    }

    // print all frequent itemsets with their frequencies
    model.freqItemsets.collect().foreach { itemset =>
      println(itemset.items.mkString("[", ",", "]") + ", " + itemset.freq)
    }

    sc.stop() // release Spark resources
  }
}
```

At runtime, the program fails with the exception shown in the title.

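Root cause: a version mismatch. The Scala version used by the project (installed separately on the system) differs from the Scala version bundled with Spark. Spark's jars are compiled against a specific Scala binary version (the major.minor series, such as 2.11 or 2.12), and Scala 2.x releases are not binary compatible across those series, so methods the compiled code expects on scala.Predef$ are missing at runtime and the JVM throws NoSuchMethodError.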

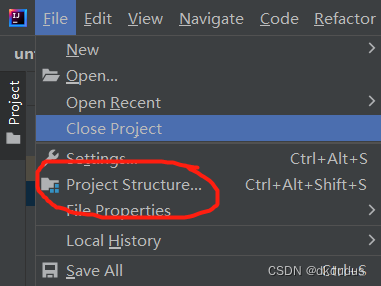

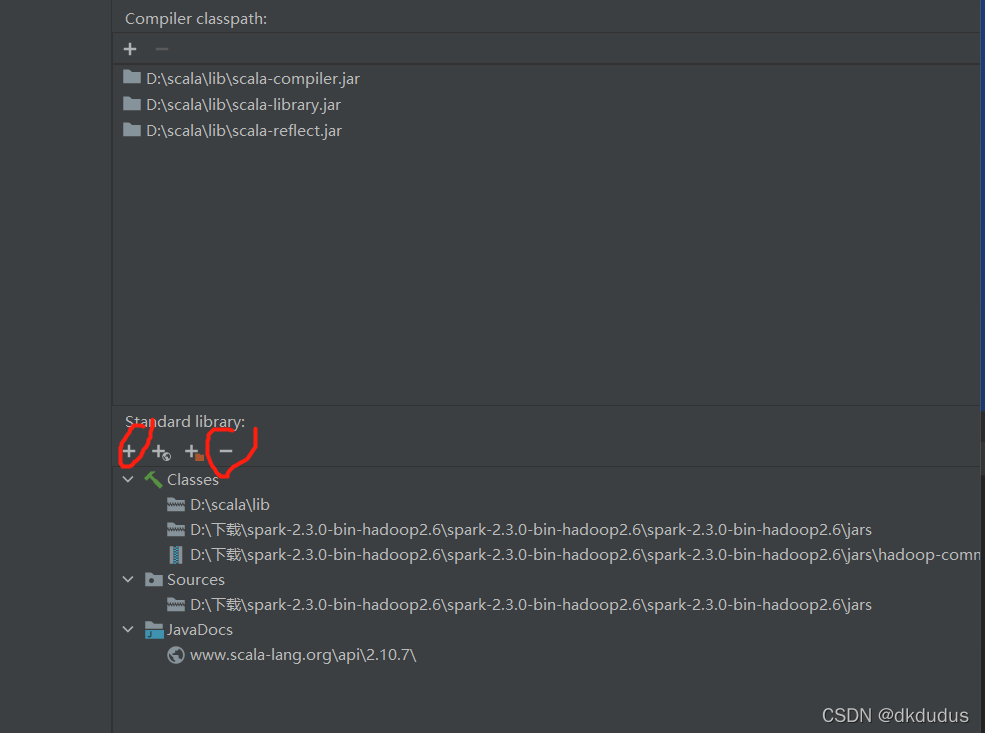
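The post does not show how to tell which Scala versions are involved. As a minimal, standard-library-only sketch, the check below compares the Scala binary version of the running program (from scala.util.Properties.versionNumberString) with the Scala suffix in a Spark jar file name. The object and helper names are hypothetical, and it assumes the jar follows the usual name_<scalaBinary>-<sparkVersion>.jar naming seen in a Spark distribution's jars directory; the jar names in main are illustrative, not taken from the original setup.

```scala
// Hypothetical helper: compares the Scala binary version of the running
// program with the Scala version a Spark jar was built for.
object ScalaVersionCheck {

  // "2.12.15" -> "2.12": Scala 2.x is binary compatible only within a major.minor series
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  // Reads the "_2.12" part of names like "spark-core_2.12-3.1.2.jar" (assumed naming scheme)
  def sparkScalaSuffix(jarName: String): Option[String] = {
    val JarPattern = """.*_(\d+\.\d+)-.*\.jar""".r
    jarName match {
      case JarPattern(v) => Some(v)
      case _             => None
    }
  }

  // True only when the jar's Scala suffix equals the binary version of `scalaVersion`
  def isCompatible(scalaVersion: String, sparkJar: String): Boolean =
    sparkScalaSuffix(sparkJar).contains(binaryVersion(scalaVersion))

  def main(args: Array[String]): Unit = {
    val runtime = scala.util.Properties.versionNumberString
    println(s"Running on Scala $runtime (binary ${binaryVersion(runtime)})")
    // Illustrative jar names only
    println(isCompatible("2.12.15", "spark-core_2.12-3.1.2.jar")) // true
    println(isCompatible("2.12.15", "spark-core_2.11-2.4.8.jar")) // false
  }
}
```

In a real project, pass the file name of the spark-core jar from the Spark installation; a false result points to the kind of mismatch described above.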

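Solution:

Remove the Scala version installed on the system from the project, import the Scala version that ships with Spark instead, and run the program again; it now runs successfully.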

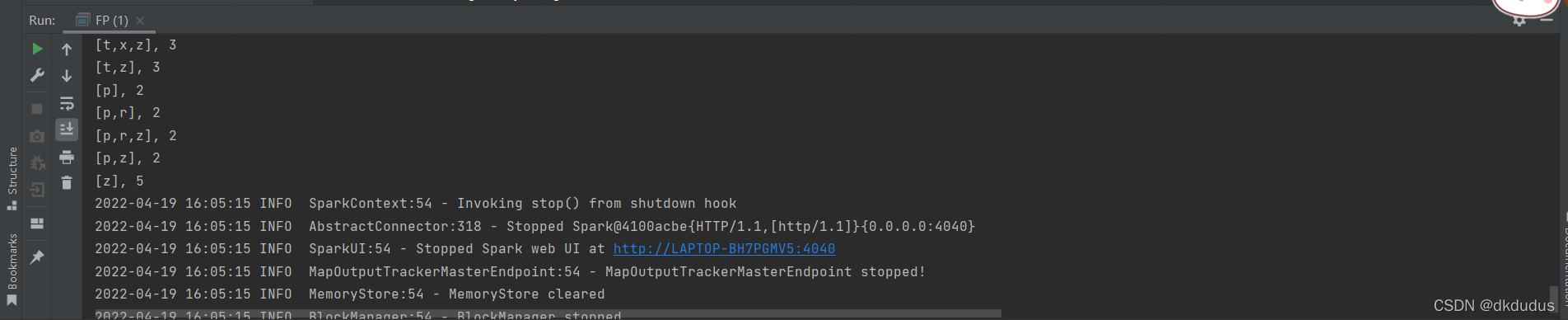

Output:
