Fixing an error when downloading a base large language model in Colab

Solution: install the dependencies with pip3.
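The `ImportError` in the traceback below is raised when transformers' `validate_environment` decides that `accelerate` or `bitsandbytes` is not available in the running kernel. The following is a minimal sketch for checking, from a notebook cell, whether both packages are importable and which versions are installed. It uses only the standard library (`importlib.util.find_spec`, `importlib.metadata.version`). The helper name `check_packages` is hypothetical, it assumes the import name equals the pip distribution name (true for these two packages), and it only approximates transformers' own availability checks, which may apply extra conditions.

```python
import importlib.util
from importlib import metadata
from typing import Dict, Iterable, Optional


def check_packages(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Hypothetical helper: map each package name to its installed version,
    "unknown" if importable but without pip metadata, or None if not importable."""
    result: Dict[str, Optional[str]] = {}
    for name in names:
        # find_spec returns None when the top-level module cannot be found
        if importlib.util.find_spec(name) is None:
            result[name] = None
            continue
        try:
            # version recorded by pip for the distribution of the same name
            result[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            result[name] = "unknown"
    return result


if __name__ == "__main__":
    for pkg, ver in check_packages(["accelerate", "bitsandbytes"]).items():
        print(f"{pkg}: {ver if ver else 'NOT importable'}")
```

Run it in the same Colab session right before loading the model; a `None` for either package means the `ImportError` is likely to come back.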


Error details


```
ImportError                               Traceback (most recent call last)
<ipython-input-8-bcbf3706e26d> in <cell line: 24>()
     22 )
     23
---> 24 model = transformers.AutoModelForCausalLM.from_pretrained(
     25     model_id,
     26     trust_remote_code=True,

2 frames

/usr/local/lib/python3.10/dist-packages/transformers/quantizers/quantizer_bnb_4bit.py in validate_environment(self, *args, **kwargs)
     60     def validate_environment(self, *args, **kwargs):
     61         if not (is_accelerate_available() and is_bitsandbytes_available()):
---> 62             raise ImportError(
     63                 "Using `bitsandbytes` 8-bit quantization requires Accelerate: `pip install accelerate` "
     64                 "and the latest version of bitsandbytes: `pip install -i https://pypi.org/simple/ bitsandbytes`"

ImportError: Using `bitsandbytes` 8-bit quantization requires Accelerate: `pip install accelerate` and the latest version of bitsandbytes: `pip install -i https://pypi.org/simple/ bitsandbytes`

---------------------------------------------------------------------------
NOTE: If your import is failing due to a missing package, you can
```

Solution: install the dependencies with pip3:

```
!pip3 install transformers==4.31.0 tokenizers==0.13.3
!pip3 install einops==0.6.1
!pip3 install -i https://pypi.org/simple/ bitsandbytes
!pip3 install accelerate
!pip3 install xformers==0.0.22.post7
!pip3 install langchain==0.1.4
!pip3 install faiss-gpu==1.7.1.post3
!pip3 install sentence_transformers
```

After installing these, loading the model again raised the error above. The loading code:

```python
from torch import cuda, bfloat16
import transformers

model_id = 'meta-llama/Llama-2-7b-chat-hf'

device = f'cuda:{cuda.current_device()}' if cuda.is_available() else 'cpu'

# set quantization configuration to load large model with less GPU memory
# this requires the `bitsandbytes` library (and `accelerate`)
bnb_config = transformers.BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type='nf4',
    bnb_4bit_use_double_quant=True,
    bnb_4bit_compute_dtype=bfloat16
)

# begin initializing HF items, you need an access token
hf_auth = 'xxxxxx'  # replace with your own Hugging Face access token

# use_auth_token matches the pinned transformers==4.31.0; newer releases prefer token=
model_config = transformers.AutoConfig.from_pretrained(
    model_id,
    use_auth_token=hf_auth
)
```
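The code above hard-codes the access token in `hf_auth`. A minimal sketch of reading it from an environment variable instead, so the token does not end up in a shared notebook. The variable name `HF_TOKEN` and the helper `get_hf_token` are assumptions for illustration; the returned string would be passed as `use_auth_token=hf_auth` exactly as in the original code.

```python
import os


def get_hf_token(var_name: str = "HF_TOKEN") -> str:
    """Hypothetical helper: read a Hugging Face access token from the environment."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        # fail early with a clear message instead of an authentication error during download
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Hugging Face access token"
        )
    return token
```

Usage: `hf_auth = get_hf_token()` in place of `hf_auth = 'xxxxxx'`.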

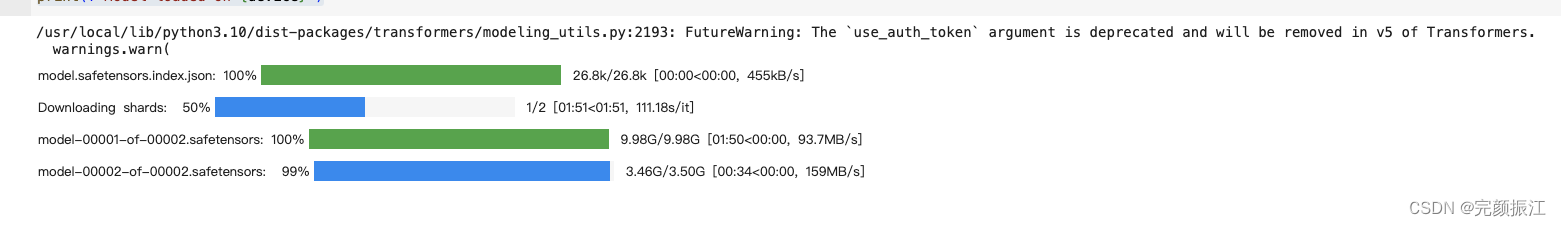

```python
model = transformers.AutoModelForCausalLM.from_pretrained(
    model_id,
    trust_remote_code=True,
    config=model_config,
    quantization_config=bnb_config,
    device_map='auto',
    use_auth_token=hf_auth
)

# switch to evaluation mode (disables dropout) for inference
model.eval()

print(f"Model loaded on {device}")
```
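The install list pins several versions (for example `transformers==4.31.0` and `tokenizers==0.13.3`), yet the traceback path `transformers/quantizers/quantizer_bnb_4bit.py` suggests the kernel that raised the error was not running the pinned transformers release. A minimal sketch for comparing what is actually installed against those pins, using only `importlib.metadata`. The helper name `find_mismatches` is hypothetical; the pins are copied from the pip3 commands in this post.

```python
from importlib import metadata
from typing import Dict, Optional, Tuple

# Pins copied from the pip3 install list in this post
PINNED = {
    "transformers": "4.31.0",
    "tokenizers": "0.13.3",
    "einops": "0.6.1",
    "xformers": "0.0.22.post7",
    "langchain": "0.1.4",
    "faiss-gpu": "1.7.1.post3",
}


def find_mismatches(pins: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Hypothetical helper: return {package: (pinned, installed)} for every package
    whose installed version differs from its pin (installed is None if missing)."""
    mismatches: Dict[str, Tuple[str, Optional[str]]] = {}
    for name, wanted in pins.items():
        try:
            installed: Optional[str] = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, got) in find_mismatches(PINNED).items():
        print(f"{name}: pinned {wanted}, installed {got}")
```

The exact string comparison is deliberately simple; for range specifiers such as `>=`, the `packaging` library is the usual choice.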
